Introduction

In this tutorial you will build a tool to quickly run R scripts from Shinkai, and use it to easily interact with your scripts' text outputs via an LLM. You will learn how to :

- use an executable path to run other software from Shinkai

- use LLMs to interact with your R scripts' text outputs

- build the tool using the Shinkai AI-assisted tool builder

- code the tool

- integrate error logging at each step

Prerequisites

Before starting this tutorial :

- Install and open Shinkai Desktop

- Install R

- Create an R project with at least 1 script

- Note these 3 paths : Rscript executable, R script to run, R project root directory
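If you are unsure where the Rscript executable lives or whether you noted the paths correctly, a small Python sketch like the one below can help. It is only an illustration: `check_paths` is a hypothetical helper, and the script and project paths are placeholder examples that you should replace with your own.

```python
import shutil
from pathlib import Path


def check_paths(rscript_path, script_path, project_dir):
    """Report whether each of the three paths exists with the expected type."""
    return {
        "rscript_executable": Path(rscript_path).is_file(),
        "r_script": Path(script_path).is_file(),
        "project_root": Path(project_dir).is_dir(),
    }


# shutil.which searches the PATH and returns None when Rscript is not on it.
rscript = shutil.which("Rscript")
print("Rscript executable:", rscript)

# Hypothetical example paths; replace them with your own.
if rscript is not None:
    print(check_paths(rscript, "/home/user/my_project/analysis.R", "/home/user/my_project"))
```

If `shutil.which` prints `None`, R may still be installed but not on your PATH; in that case, look for the Rscript executable inside your R installation folder.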

Part 1 : Building an R script executor tool using the Shinkai AI-assisted tool builder

Shinkai offers an effortless tool building experience thanks to its AI-assisted tool builder, where even library dependencies and tool metadata are handled automatically. In the tool creation UI :

- select a performant LLM (e.g., shinkai_code_gen, shinkai_free_trial, gpt_4_1)

- select a programming language (we’ll use Python in this tutorial)

- write a prompt that describes the tool well, then execute it. The prompt should cover :

  - the task the tool should accomplish and how

  - what you would want in configuration versus inputs

  - how to handle errors

Prompt

Part 2 : Full code for an R script executor tool

The tool does the following :

- sets up configuration, input, and output classes

- checks that the Rscript executable, the R script, and the project directory exist

- switches to the project directory

- prepares and runs the R script using subprocess

- captures outputs and errors from the script

- returns to the original directory

- returns the results (success/failure, output, errors)
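The steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the tutorial's generated tool: the class names (`Config`, `Inputs`, `Output`), their fields, and the `run_r_script` function are hypothetical, and the code Shinkai generates for you will likely be structured differently.

```python
import os
import subprocess
from dataclasses import dataclass


@dataclass
class Config:
    rscript_path: str  # hypothetical field: path to the Rscript executable


@dataclass
class Inputs:
    script_path: str   # hypothetical field: R script to run
    project_dir: str   # hypothetical field: R project root directory


@dataclass
class Output:
    success: bool
    stdout: str
    stderr: str


def run_r_script(config: Config, inputs: Inputs) -> Output:
    # Check that the executable, the script, and the project directory exist.
    if not os.path.isfile(config.rscript_path):
        return Output(False, "", f"Rscript executable not found: {config.rscript_path}")
    if not os.path.isfile(inputs.script_path):
        return Output(False, "", f"R script not found: {inputs.script_path}")
    if not os.path.isdir(inputs.project_dir):
        return Output(False, "", f"Project directory not found: {inputs.project_dir}")

    original_dir = os.getcwd()
    # Resolve paths before changing directory, in case they are relative.
    executable = os.path.abspath(config.rscript_path)
    script = os.path.abspath(inputs.script_path)
    try:
        # Switch to the project directory so relative paths in the script resolve.
        os.chdir(inputs.project_dir)
        # Run the script and capture its text outputs and errors.
        result = subprocess.run([executable, script], capture_output=True, text=True)
        return Output(result.returncode == 0, result.stdout, result.stderr)
    except OSError as exc:
        # Raised, for example, when the executable cannot be launched.
        return Output(False, "", f"Failed to run R script: {exc}")
    finally:
        # Always return to the original directory, even after a failure.
        os.chdir(original_dir)
```

Returning an `Output` with an error message at each check, instead of raising, keeps the failure reason visible to the LLM that reads the tool result.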

Full Code

- Give concrete examples for inputs and configurations. Be super explicit (e.g. an example of an R script file path). It helps users and yourself know or recall the precise formats (or values, options, etc.) required or possible.

- Pick useful keywords.

- Write a clear but thorough tool description.

Metadata

Part 3 : Using the tool, interacting with R script text outputs from Shinkai

First, enter your Rscript executable path in the tool configuration.

- select a suitable LLM : either a performant LLM able to understand complex and potentially long context (especially if executing advanced R scripts), or an LLM tailored to the type of outputs your R script creates

- type ’/’ to access the list of available tools

- select the R Script Executor tool

- add your 2 input paths : R script and R project root directory

- add a prompt to interact with the text outputs of the R script

- press ‘enter’ or click on the arrow to send

- The R script itself

- The text outputs of its execution (results and any errors)

- “I am trying to learn how this R script functions. Explain its overall process to me, and then each step in more detail.”

- “Based on this R script and its execution results, what steps could I add to get more detailed error logs ?”

- “Remind me which parameters are used for ‘given task/step/function/etc.’ in this R script. And based on the results suggest better parameters.”

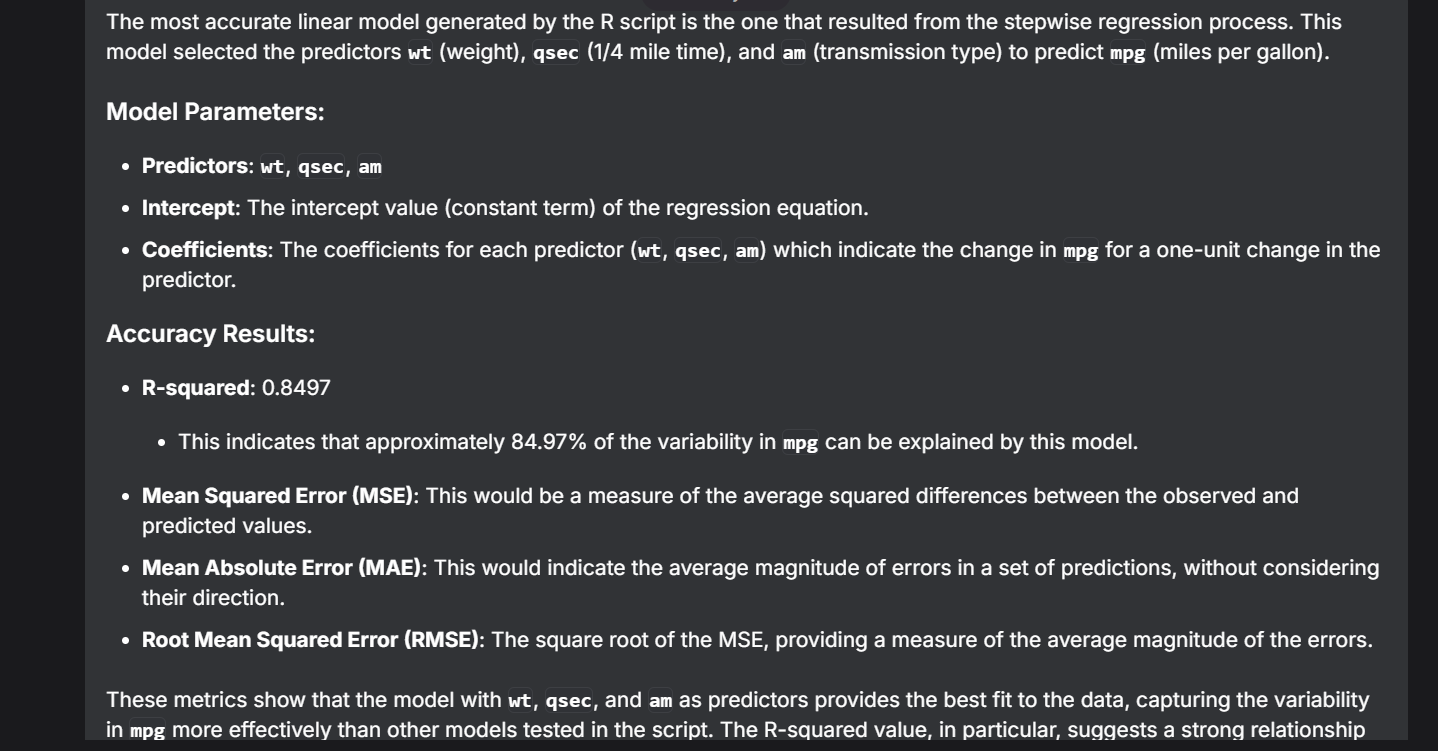

- “Among all the models generated by this R script, which one is the most accurate ? Show me its parameters and accuracy results.”

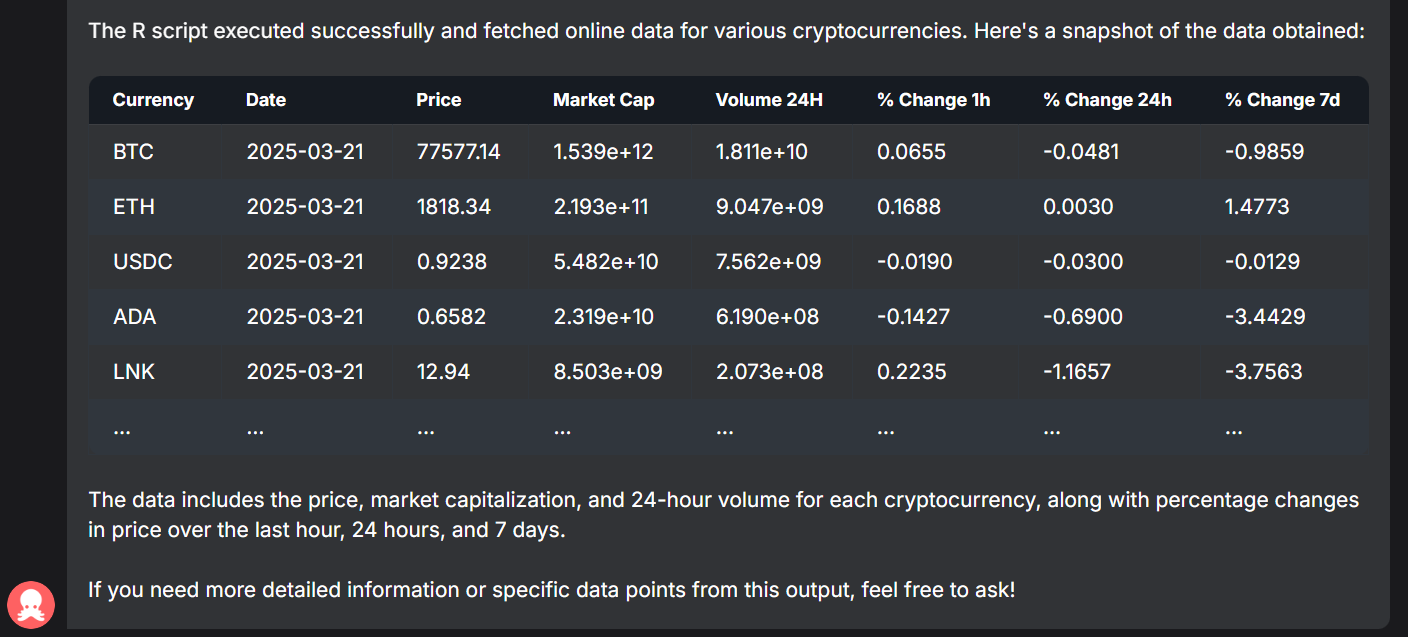

- Any question about the data the script outputs in the console.

Answering which linear model is the best :

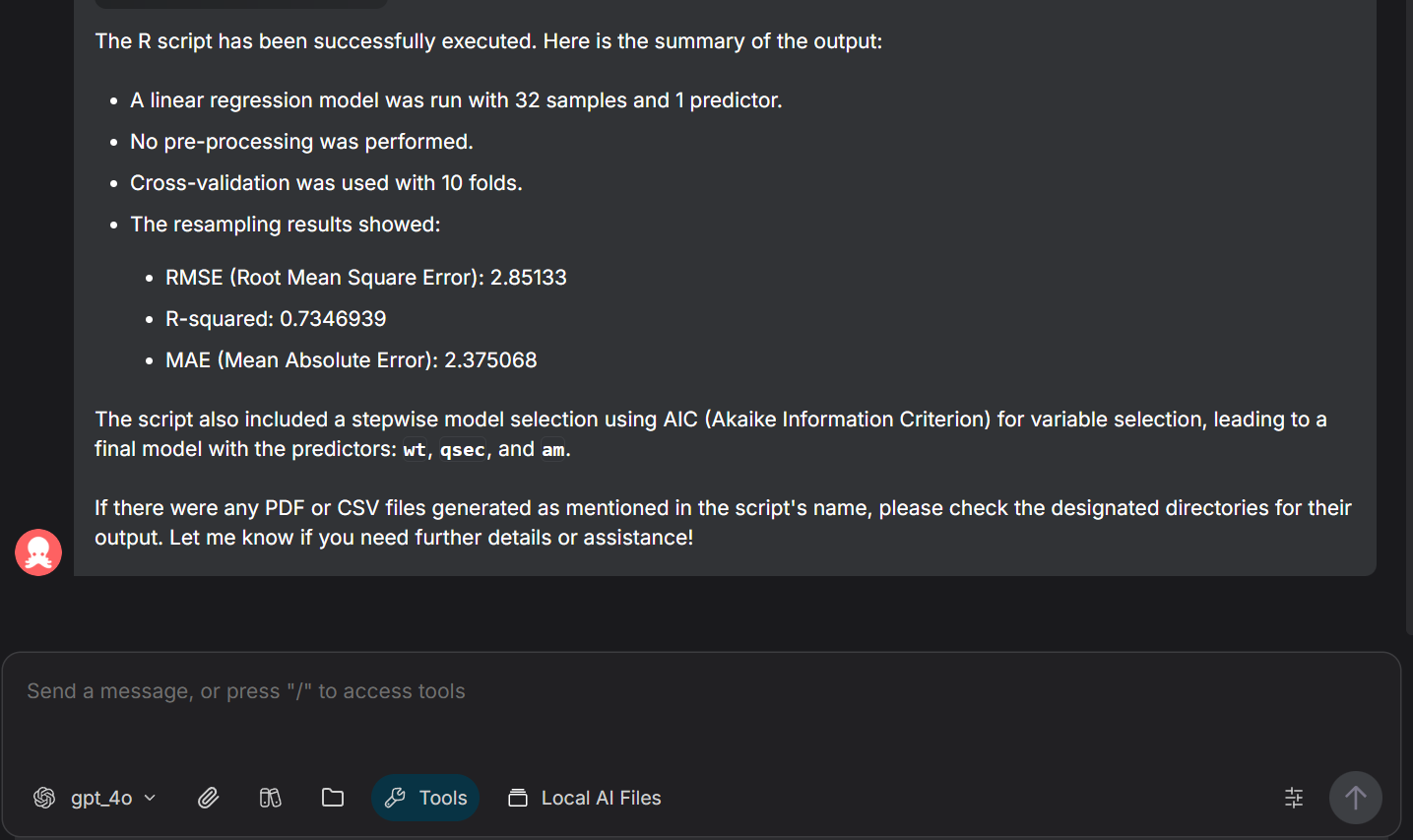

Summarizing :

Showing an extract of the data :

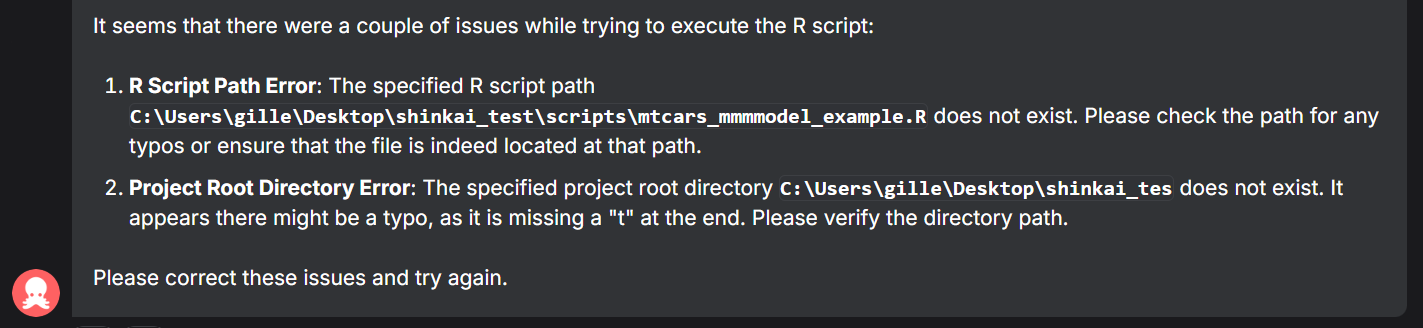

Error handling :